Batch processing, a powerful technique for streamlining workflows, offers significant advantages across various industries. This guide delves into the intricacies of batching tasks, from defining the concept to implementing practical strategies. Understanding how to effectively batch tasks can lead to substantial gains in efficiency and productivity.

This in-depth exploration covers crucial aspects, including identifying suitable tasks, implementing various batching strategies, and leveraging appropriate tools and technologies. We’ll also discuss optimization, error handling, and security considerations to ensure smooth and robust batch processes.

Defining Batching

Batch processing is a method of handling tasks by grouping similar operations together for execution in a single run. This approach contrasts with individual task processing, where each task is executed independently. The core principle is to optimize efficiency by consolidating work into larger, more manageable units.

Instead of processing each task one at a time, related tasks are collected and processed as a single unit. This aggregation spreads fixed costs such as setup, connection, and scheduling overhead across many tasks, which improves resource use and reduces total processing time. For instance, instead of submitting each document to the printer individually, a batch print job queues multiple documents and prints them in one run.
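As a minimal sketch of the grouping idea described above, the following Python helper splits any sequence of tasks into fixed-size batches. The function name `batched` and the sample file names are illustrative assumptions, not part of any particular system.

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def batched(items: Iterable[T], batch_size: int) -> Iterator[List[T]]:
    """Yield consecutive lists holding at most batch_size items each."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(items)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


# Hypothetical documents waiting to be printed.
documents = ["a.txt", "b.txt", "c.txt", "d.txt", "e.txt"]
for batch in batched(documents, 2):
    print(batch)
```

The last batch may be smaller than the others, which is usually acceptable. On Python 3.12 and later, the standard library offers `itertools.batched`, which does the same job but yields tuples.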

Benefits of Batch Processing

Batch processing offers several advantages across various contexts. Improved resource utilization is a key benefit. By grouping tasks, systems can optimize the use of memory, CPU time, and network bandwidth. This leads to reduced processing time and potentially lower costs. Another key benefit is the potential for improved data integrity.

When tasks are processed in batches, there’s a greater opportunity for comprehensive validation and error detection before the batch is completed. This can prevent errors from propagating throughout a system. Furthermore, batch processing is frequently employed for tasks requiring large datasets or substantial computational power. This allows for more efficient handling of substantial data volumes.

Advantages and Disadvantages of Batch Processing

| Advantages | Disadvantages |
|---|---|
| Improved resource utilization (e.g., CPU, memory, network). | Potential for increased wait time for individual tasks. |
| Reduced processing time compared to individual task processing, especially for large volumes of tasks. | Batch processing may not be suitable for tasks requiring immediate responses. |
| Enhanced data integrity due to comprehensive validation and error detection before completion of the batch. | Potential for significant data loss if the batch processing fails. |
| Suitable for large datasets or computationally intensive tasks. | Potential for system overload if the batch size is too large. |
| Cost savings due to optimized resource utilization. | Complexity in managing and monitoring large batches. |

Batch processing offers a structured approach to managing tasks, but it’s essential to weigh the advantages against potential drawbacks. Careful consideration of task volume, resource availability, and response time requirements is crucial when implementing batch processing.

Identifying Tasks Suitable for Batching

Batching tasks effectively streamlines workflows and optimizes resource utilization. Careful selection of tasks suitable for batching is crucial for achieving these benefits. Understanding the characteristics of batchable tasks is paramount for maximizing efficiency and minimizing wasted effort.

Identifying the right tasks for batch processing requires a keen understanding of the task’s nature, dependencies, and potential for grouping similar operations. This involves analyzing the characteristics of each task to determine its compatibility with batch processing principles.

Types of Tasks Well-Suited for Batching

Effective batch processing relies on identifying tasks with certain commonalities. Tasks that exhibit repetitive actions, require similar resources, and have minimal individual variability are prime candidates for batching. A good example of this would be generating reports from multiple data sources.

- Data Processing Tasks: Tasks involving the processing of large datasets, such as data transformation, cleansing, or aggregation, are often ideal for batching. The repetitive nature of processing numerous data points lends itself well to batching, allowing for significant efficiency gains.

- Report Generation: Creating reports from various data sources often involves similar steps for each data point. Batching these report generation processes can dramatically reduce processing time and improve reporting turnaround.

- Email or SMS Marketing Campaigns: Sending out mass communications, such as marketing emails or SMS messages, can be significantly optimized by batching the process. Grouping similar communications allows for greater efficiency and reduced individual processing time.

- Automated Order Processing: Handling multiple orders simultaneously, like in e-commerce platforms, often involves repetitive steps for each order. Batching the processing of these orders can streamline the entire order fulfillment process.

- File Conversion Tasks: Converting a large number of files from one format to another, such as converting multiple documents from .docx to .pdf, is another excellent candidate for batch processing. The repetitive nature of this conversion process aligns perfectly with batching principles.

Comparison of Batchable and Non-Batchable Tasks

Tasks suitable for batch processing often exhibit a high degree of repetition and a low degree of individual variability. Conversely, tasks requiring unique, customized actions or significant individual processing are generally not suitable for batching.

| Characteristic | Batchable Tasks | Non-Batchable Tasks |
|---|---|---|
| Repetition | High | Low |
| Variability | Low | High |
| Individual Processing Time | Often uniform or similar | Often variable |
| Resource Requirements | Similar for each task | Varying per task |
| Examples | Data processing, report generation, mass communications | Customer support interactions, personalized recommendations |

Criteria for Determining Batch Suitability

Several factors determine whether a task is suitable for batching. The key criteria are: the task’s degree of repetition, the potential for grouping similar actions, and the task’s impact on overall workflow efficiency.

A task can be batched effectively if its individual steps are repetitive, its resource needs are consistent, and delaying it until the batch runs does not hold up the rest of the workflow. The ability to group similar operations and the potential to reduce overall processing time are the deciding factors.

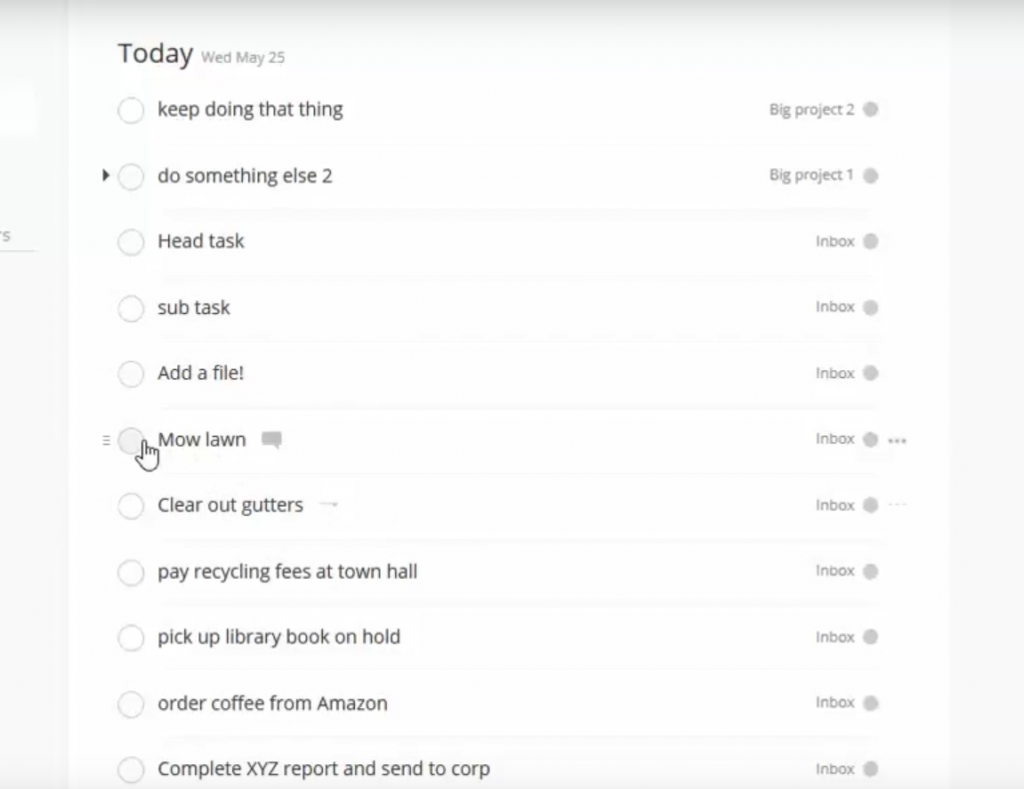

Strategies for Batching Tasks

Batching tasks effectively is crucial for optimizing workflow and resource allocation. By grouping similar tasks together, businesses can streamline operations, reduce context switching, and improve overall efficiency. This approach can lead to significant time savings and cost reductions, particularly in repetitive processes.

Different batching strategies cater to various needs and contexts. Understanding these strategies and their application is key to maximizing the benefits of batch processing. This section delves into various approaches, illustrating how they can be implemented and applied in diverse industries.

Time-Based Batching

Time-based batching schedules tasks at predetermined intervals. This approach suits recurring activities or processes that must run at specific times; daily report generation and nightly database backups are typical examples. Running on a fixed schedule gives consistent execution, reduces the risk of missed deadlines, and helps maintain the organization’s operational cadence.
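One way to picture time-based batching is to assign each incoming item to the fixed window in which it arrived, then process one window at a time. The sketch below assumes naive (timezone-free) timestamps; the helper names `window_start` and `group_by_window` are hypothetical.

```python
from collections import defaultdict
from datetime import datetime, timedelta

EPOCH = datetime(1970, 1, 1)


def window_start(ts: datetime, interval: timedelta) -> datetime:
    """Round a timestamp down to the start of the interval it falls in."""
    whole_intervals = (ts - EPOCH) // interval  # timedelta // timedelta gives an int
    return EPOCH + whole_intervals * interval


def group_by_window(events, interval: timedelta) -> dict:
    """Group (timestamp, payload) pairs by window, ordered by window start."""
    windows = defaultdict(list)
    for ts, payload in events:
        windows[window_start(ts, interval)].append(payload)
    return dict(sorted(windows.items()))
```

In practice the trigger itself usually comes from a scheduler such as cron; this sketch only shows how items map onto the intervals.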

Volume-Based Batching

Volume-based batching groups tasks based on the accumulated volume of work. This strategy is suitable for situations where the quantity of tasks is a primary factor. A customer order fulfillment system, for example, might batch orders together until a certain volume threshold is reached, enabling efficient resource allocation and streamlined processing of orders. This approach focuses on optimizing the handling of larger quantities of work.
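A volume threshold can be sketched as a small buffer that hands its contents to a handler once enough items have accumulated. The class name `VolumeBatcher` and its `handler` callback are assumptions made for illustration.

```python
class VolumeBatcher:
    """Collect items and pass them to a handler once a threshold is reached."""

    def __init__(self, threshold, handler):
        if threshold < 1:
            raise ValueError("threshold must be at least 1")
        self.threshold = threshold
        self.handler = handler
        self._pending = []

    def add(self, item):
        """Buffer one item and flush automatically when the batch is full."""
        self._pending.append(item)
        if len(self._pending) >= self.threshold:
            self.flush()

    def flush(self):
        """Process whatever is buffered, even if the batch is not full."""
        if self._pending:
            batch, self._pending = self._pending, []
            self.handler(batch)
```

Calling `flush()` at shutdown, or on a timer, keeps a partially filled batch from waiting indefinitely when traffic is low.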

Priority-Based Batching

Priority-based batching groups tasks according to their importance and urgency. This is especially valuable for tasks requiring immediate attention. Urgent customer support requests, for instance, can be prioritized and processed in a separate batch to ensure swift responses and improved customer satisfaction. This approach helps businesses to focus on critical tasks first, maximizing the impact of their efforts.
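Priority-based batching can be approximated with a heap that always releases the most urgent tasks first. In this sketch a lower number means higher priority, and the class name `PriorityBatcher` is hypothetical.

```python
import heapq
from itertools import count


class PriorityBatcher:
    """Queue tasks by priority and release them in urgency order."""

    def __init__(self):
        self._heap = []
        self._sequence = count()  # tie-breaker: keeps arrival order within a priority

    def add(self, priority, task):
        """Queue a task; lower priority numbers are served first."""
        heapq.heappush(self._heap, (priority, next(self._sequence), task))

    def next_batch(self, max_size):
        """Remove and return up to max_size of the most urgent tasks."""
        batch = []
        while self._heap and len(batch) < max_size:
            _, _, task = heapq.heappop(self._heap)
            batch.append(task)
        return batch
```

A queue like this can starve low-priority work if urgent tasks keep arriving, so real systems often age priorities or reserve capacity for older items.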

Step-by-Step Creation of a Batch Process

To establish a robust batch process, a structured approach is necessary. The steps typically involve defining the batch criteria, identifying suitable tasks, creating a scheduling mechanism, implementing a processing system, and finally, monitoring and evaluating the process’s effectiveness.

- Define Batch Criteria: Clearly articulate the rules for grouping tasks. This includes determining the volume, time, or priority parameters.

- Identify Suitable Tasks: Select tasks that are compatible with the chosen batching strategy. This might involve evaluating task frequency, dependencies, and resource requirements.

- Establish a Scheduling Mechanism: Develop a system for triggering and managing batch execution. This could include automated scheduling tools or manual interventions based on the batching strategy.

- Implement a Processing System: Design a system that effectively handles and processes the batched tasks. This system needs to account for any data dependencies or task dependencies within the batch.

- Monitor and Evaluate: Continuously track the performance of the batch process. This involves measuring efficiency, identifying bottlenecks, and adjusting the strategy as needed.

Examples of Successful Batching Strategies Across Industries

Batching strategies are widely employed across various industries. For example, in manufacturing, batching production runs optimizes resource utilization and minimizes material waste. In the finance sector, batch processing of transactions improves operational efficiency and reduces processing time. In customer service, batching support tickets based on priority ensures prompt resolution of critical issues.

Comparison of Batching Strategies

| Strategy | Criteria | Suitability | Advantages | Disadvantages |
|---|---|---|---|---|
| Time-Based | Fixed intervals | Recurring tasks | Predictable execution, consistent schedule | May not be optimal for varying task volumes |
| Volume-Based | Accumulated volume | Large quantities of similar tasks | Efficient resource allocation, optimized processing | May lead to delays for urgent tasks |
| Priority-Based | Urgency and importance | Tasks needing immediate attention | Improved customer satisfaction, quick resolution | Requires clear prioritization criteria, potential for bias |

Tools and Technologies for Batch Processing

Batch processing, when implemented effectively, can significantly enhance productivity and efficiency in various applications. This section explores specific software tools designed to facilitate and streamline the batch processing of tasks. These tools provide robust mechanisms for handling large volumes of data or operations, thereby automating repetitive procedures and freeing up human resources for more strategic initiatives.

Software Tools Supporting Batch Processing

Several software tools are specifically designed to support batch processing, enabling organizations to automate complex workflows and reduce manual intervention. These tools provide a structured approach to managing and executing a series of tasks in an organized manner, thereby maximizing the benefits of batch processing.

- Apache Kafka: A distributed streaming platform, Kafka excels in handling high-volume data streams. Although it is primarily a streaming system rather than a batch scheduler, its message queuing capabilities allow applications to send and receive records in batches. This enables asynchronous communication, improving application responsiveness and scalability, which is particularly beneficial for large-scale data processing pipelines. Kafka’s distributed nature provides fault tolerance and horizontal scalability, both critical for handling massive datasets. Its publish-subscribe model simplifies the coordination of tasks across the components of a batch pipeline.

- Apache Spark: A unified analytics engine, Spark is a powerful tool for batch processing large datasets. It leverages in-memory computing to achieve significant performance gains compared to traditional disk-based processing. Spark’s ability to handle various data formats (structured and unstructured) makes it suitable for diverse batch processing needs. Its high-level APIs, with abstractions such as Resilient Distributed Datasets (RDDs) and DataFrames, allow for easy development of batch processing applications. Its distributed architecture processes a dataset across multiple machines, which matters when the data no longer fits on one.

- AWS Batch: A fully managed batch computing service provided by Amazon Web Services, AWS Batch simplifies the execution of batch jobs on AWS compute resources such as Amazon EC2 instances. It streamlines the provisioning of compute resources, enabling users to focus on the batch job logic rather than the underlying infrastructure management. AWS Batch handles job scheduling, resource allocation, and task monitoring, improving overall batch processing efficiency. Its integration with other AWS services enhances its utility in complex workflows.

Technical Aspects of Batch Processing in AWS Batch

AWS Batch provides a user-friendly interface for defining and executing batch jobs. Batch jobs are typically composed of individual tasks, grouped together for execution. The service manages the allocation of compute resources based on the defined job specifications, ensuring that tasks are processed efficiently.

AWS Batch automatically manages the scaling of compute resources based on job demand. This ensures optimal resource utilization and prevents under- or over-allocation, either of which can significantly affect costs.

A key aspect of AWS Batch is its ability to handle dependencies between tasks. By defining these dependencies, users can ensure that tasks are executed in the correct order, guaranteeing the integrity of the overall batch process. Error handling mechanisms within AWS Batch allow for graceful recovery from failures, maintaining data consistency and preventing cascading failures. The job queuing and prioritization features allow for managing different job priorities within the batch process.
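The idea of dependency-ordered execution can be illustrated without any cloud service. The sketch below does not use the AWS Batch API; it is a generic illustration that uses Python’s standard `graphlib` module (Python 3.9 or later) to work out which jobs may run together. The job names and the `execution_waves` helper are hypothetical.

```python
from graphlib import TopologicalSorter


def execution_waves(dependencies):
    """Return lists of jobs that can run together, in dependency order.

    `dependencies` maps each job name to the set of jobs it must wait for.
    """
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # jobs whose prerequisites are all done
        waves.append(ready)
        sorter.done(*ready)
    return waves


# Hypothetical pipeline: two middle steps both depend on "extract".
jobs = {
    "extract": set(),
    "transform": {"extract"},
    "validate": {"extract"},
    "load": {"transform", "validate"},
}
print(execution_waves(jobs))
```

Each inner list is a group of jobs with no unmet dependencies, so the jobs within a group could be dispatched in parallel.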

Comparison of Batch Processing Tools

| Tool | Functionality | Key Features for Batch Processing |
|---|---|---|
| Apache Kafka | Distributed streaming platform | High-volume data streaming, message queuing, asynchronous communication, fault tolerance, horizontal scalability |
| Apache Spark | Unified analytics engine | In-memory computing, diverse data formats, high-level APIs, distributed architecture |
| AWS Batch | Fully managed batch computing service | Job scheduling, resource allocation, task monitoring, dependency management, error handling, compute resource scaling |

Optimizing Batch Processes

Batch processing, while offering efficiency gains, demands careful optimization to ensure maximum output and minimal errors. Effective optimization strategies reduce processing time, minimize potential issues, and enhance the overall reliability of batch operations. This section delves into key strategies for achieving these goals.

Strategies for Maximum Efficiency

Optimizing batch processes for maximum efficiency requires a multifaceted approach, encompassing task prioritization, resource allocation, and process automation. Careful planning and scheduling of tasks within a batch are crucial. Prioritizing tasks based on their criticality or dependencies minimizes delays and ensures that high-priority items are processed promptly. Efficient resource allocation, including hardware and software, is essential for maintaining processing speed and preventing bottlenecks.

Automation of repetitive tasks further streamlines the process, reducing human error and increasing throughput.

Minimizing Delays and Errors

Minimizing delays and errors in batch processing is paramount for maintaining operational stability. Proactive monitoring of batch progress, coupled with robust error handling mechanisms, is critical. Regular checks on the status of individual tasks within a batch can identify potential bottlenecks or delays early, enabling timely intervention. Implementing effective error handling mechanisms, such as rollback procedures and retry strategies, minimizes the impact of unexpected issues and ensures data integrity.

Importance of Monitoring and Evaluating Batch Processes

Monitoring and evaluating batch processes is vital for continuous improvement and proactive problem-solving. Real-time monitoring tools provide insights into the performance of individual tasks and the overall batch process. Data collected from these tools allows for identifying patterns and trends, which can then be leveraged to refine processes, improve resource allocation, and enhance efficiency. Regular evaluation of key performance indicators (KPIs) helps identify areas for improvement and ensures that batch processes meet established targets.

Performance Metrics for Batch Processes

A robust understanding of batch process performance requires a comprehensive set of metrics. These metrics help in assessing the effectiveness and efficiency of the batch processing system.

| Metric | Description | Importance |
|---|---|---|
| Throughput | The number of tasks processed per unit of time. | Indicates the overall processing capacity of the system. |
| Processing Time | The average time taken to process a single task. | Highlights potential bottlenecks and areas for optimization within the batch process. |
| Error Rate | The percentage of tasks that resulted in errors. | Identifies the robustness of the process and potential weaknesses in error handling. |
| Resource Utilization | The percentage of available resources (CPU, memory, network) used during processing. | Helps identify bottlenecks and optimize resource allocation to improve efficiency. |
| Completion Rate | The percentage of tasks completed successfully within a specific timeframe. | Provides insight into the success rate and reliability of the batch process. |
| Batch Completion Time | The total time taken to complete the entire batch of tasks. | Indicates the overall efficiency of the batch process and its ability to meet deadlines. |
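Several of the metrics in the table can be derived from a simple record of per-task outcomes. The following sketch assumes each task reports its duration and whether it succeeded; the `TaskResult` type and `summarize` function are illustrative names, and resource utilization is left out because it has to come from system monitoring rather than task records.

```python
from dataclasses import dataclass


@dataclass
class TaskResult:
    duration_seconds: float
    succeeded: bool


def summarize(results, batch_seconds):
    """Compute batch metrics from task results and total wall-clock time."""
    total = len(results)
    if total == 0 or batch_seconds <= 0:
        raise ValueError("need at least one result and a positive batch time")
    failures = sum(1 for r in results if not r.succeeded)
    return {
        "throughput_per_second": total / batch_seconds,
        "avg_processing_seconds": sum(r.duration_seconds for r in results) / total,
        "error_rate_percent": 100 * failures / total,
        "completion_rate_percent": 100 * (total - failures) / total,
        "batch_completion_seconds": batch_seconds,
    }
```

Note that the average processing time can exceed the batch time divided by the task count when tasks run in parallel, which is why throughput and per-task time are tracked separately.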

Error Handling and Recovery in Batch Processes

Robust batch processing necessitates comprehensive error handling and recovery mechanisms. Unanticipated errors during execution can lead to data loss, wasted resources, and significant delays in downstream processes. Implementing effective strategies for detecting, managing, and recovering from errors is critical for ensuring the reliability and efficiency of batch jobs.

Importance of Error Handling

Error handling in batch processes is paramount for maintaining data integrity and preventing the propagation of errors. A well-designed error handling strategy ensures that failures are detected promptly, preventing the accumulation of errors that could render the entire batch process useless. This proactive approach safeguards against unexpected disruptions, minimizing the impact of failures on the overall system performance and data quality.

Errors can result in corrupted data, lost transactions, and inconsistent system states, impacting subsequent processes and potentially requiring costly manual intervention. Robust error handling helps maintain data accuracy and integrity, reducing the risk of errors cascading through the system.

Strategies for Handling Errors During Batch Processing

Effective error handling involves a multi-layered approach. Early detection and logging of potential issues are crucial. Implementing checkpoints, where the batch process is periodically saved, is vital for facilitating recovery. Error logging should include details such as the error type, timestamp, location in the process, and associated data. This comprehensive information enables rapid identification and resolution of issues.

Methods for Recovering from Errors within a Batch Process

Recovery from errors in batch processing often involves reverting to a previous stable state. Using checkpoints allows the process to resume from the last successful point. Rollback mechanisms are essential for undoing any changes made since the last checkpoint. Careful consideration of the rollback strategy is critical to minimize data corruption and ensure consistency. A well-defined rollback procedure helps to restore the system to a known good state, mitigating the impact of errors.
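Checkpoint-based resumption can be sketched with a small JSON file that records how far the batch has progressed. The file layout, the function names, and the `checkpoint_every` parameter below are assumptions for illustration only.

```python
import json
import os
import tempfile


def load_checkpoint(path):
    """Return the index of the next unprocessed item (0 if none is saved)."""
    try:
        with open(path) as f:
            return json.load(f)["next_index"]
    except FileNotFoundError:
        return 0


def save_checkpoint(path, next_index):
    """Write the checkpoint via a temp file so it is never left half-written."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "w") as f:
        json.dump({"next_index": next_index}, f)
    os.replace(tmp_path, path)  # atomic rename over the old checkpoint


def run_with_checkpoints(items, process, checkpoint_path, checkpoint_every=100):
    """Process items in order, resuming from the last saved checkpoint."""
    start = load_checkpoint(checkpoint_path)
    for index in range(start, len(items)):
        process(items[index])
        if (index + 1) % checkpoint_every == 0:
            save_checkpoint(checkpoint_path, index + 1)
    save_checkpoint(checkpoint_path, len(items))
```

After a failure, items processed since the last checkpoint are run again on resume. The `process` step therefore needs to be idempotent, or paired with a rollback of the partial work, for the result to stay consistent.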

Error Handling Techniques and Applications

| Error Handling Technique | Description | Application |
|---|---|---|
| Logging | Recording errors, including type, timestamp, and affected data. | Tracking errors and facilitating debugging. |
| Checkpointing | Saving the batch process state at regular intervals. | Enabling resumption from a previous state after errors. |
| Rollback | Reverting changes made since the last checkpoint. | Ensuring data consistency and avoiding data corruption. |
| Retry Mechanism | Attempting to re-execute failed operations after a delay. | Handling transient errors like network issues or temporary resource unavailability. |
| Error Queuing | Storing failed tasks in a queue for later processing. | Dealing with errors that cannot be immediately resolved, allowing for batch processing of errors. |
| Alerting | Notifying administrators or operators of errors. | Enabling timely intervention and resolution of errors. |
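Two of the techniques in the table, the retry mechanism and error queuing, combine naturally. The sketch below retries each task with exponential backoff and collects tasks that still fail so the rest of the batch can continue. All names are hypothetical, and retrying on every exception is a simplification; production code would normally retry only errors known to be transient.

```python
import time


def process_with_retry(task, handler, max_attempts=3, base_delay=0.5, sleep=time.sleep):
    """Call handler(task), retrying with exponential backoff on failure."""
    for attempt in range(1, max_attempts + 1):
        try:
            return handler(task)
        except Exception:
            if attempt == max_attempts:
                raise  # out of attempts: let the caller decide what to do
            sleep(base_delay * 2 ** (attempt - 1))


def run_with_error_queue(tasks, handler, **retry_options):
    """Process every task; return (results, failed) instead of aborting."""
    results, failed = [], []
    for task in tasks:
        try:
            results.append(process_with_retry(task, handler, **retry_options))
        except Exception as exc:
            failed.append((task, repr(exc)))  # error queue for later handling
    return results, failed
```

The `failed` list plays the role of the error queue: it can be logged, used to trigger an alert, or fed into a later batch once the underlying problem is fixed.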

Examples of Batch Processing in Action

Batch processing is a crucial aspect of many modern business operations, streamlining tasks and enhancing efficiency across various industries. Its ability to handle large volumes of data and transactions simultaneously reduces processing time and improves overall productivity. This section explores practical examples of batch processing in action, demonstrating its widespread application and benefits.

Batch Processing in Finance

Financial institutions extensively utilize batch processing for tasks like transaction processing, reconciliation, and reporting. Automated systems process large volumes of transactions such as credit card payments, wire transfers, and loan applications in batches. This approach ensures timely and accurate processing of financial data, reducing manual intervention and potential errors. By batching these transactions, banks and financial institutions can maintain high throughput and ensure smooth operations.

This significantly improves efficiency in managing and reconciling financial accounts, especially when dealing with a high volume of transactions. For example, a bank might batch process thousands of loan applications daily, enabling swift processing and reduced turnaround times for customers.

Batch Processing in Manufacturing

Manufacturing industries leverage batch processing for various tasks, including order fulfillment, inventory management, and quality control. A manufacturing company might use batch processing to automatically generate production schedules based on customer orders, manage inventory levels, and track product quality throughout the production cycle. Batch processing allows for the efficient handling of large orders, enabling manufacturers to optimize their production lines and maintain accurate inventory records.

This approach is critical for maintaining the flow of production and delivering products on time to customers. For example, a car manufacturer might use batch processing to schedule the production of a specific model based on customer orders.

Batch Processing in Online Retail

Online retailers often employ batch processing for tasks like order processing, inventory updates, and customer reporting. Batch processing allows them to handle a high volume of orders efficiently. The system processes orders in batches, updating inventory and generating shipping labels. This ensures that orders are processed accurately and quickly, leading to a smoother customer experience. A key aspect of this is the ability to update inventory in bulk, which is critical for managing stock levels and preventing stockouts.

For example, an online retailer might batch process orders placed within a specific timeframe, automatically updating inventory levels and generating shipping labels for each order in the batch.

Detailed Example: Batch Processing for Online Product Ordering

A detailed example of batch processing in online retail involves the ordering of products. Imagine an online retailer that receives hundreds or even thousands of orders daily. A batch process can be implemented to manage these orders. When a customer places an order, the order data is collected and temporarily stored. At regular intervals (e.g., every hour or every few hours), a batch processing system retrieves these orders.

This system then performs the following tasks:

- Order validation: The system validates each order to ensure that the customer has sufficient funds and that the product is in stock. Invalid orders are flagged for manual review.

- Inventory updates: If an order is valid, the system updates the inventory levels by deducting the ordered quantities from the available stock.

- Shipping label generation: The system generates shipping labels for each valid order, incorporating the shipping address and other relevant details.

- Order confirmation: The system sends order confirmation emails to the customer.

- Payment processing: The system processes payments for the valid orders.

This batch process ensures that orders are processed efficiently and accurately, maintaining the smooth functioning of the online store. It automates the handling of large volumes of orders, freeing up human resources for other tasks and contributing to a better customer experience.
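The validation and inventory steps of this example can be sketched in a few lines. The code below covers only stock checks and inventory updates; payment, shipping labels, and confirmation emails depend on external systems and are left out. The `Order` fields and the in-memory `inventory` dictionary are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Order:
    order_id: str
    sku: str
    quantity: int


def process_order_batch(orders, inventory):
    """Check each order against stock, deduct inventory, and split the results.

    `inventory` maps SKU to units in stock and is updated in place.
    Returns (accepted, flagged); flagged orders are left for manual review.
    """
    accepted, flagged = [], []
    for order in orders:
        available = inventory.get(order.sku, 0)
        if order.quantity <= 0 or order.quantity > available:
            flagged.append(order)
            continue
        inventory[order.sku] = available - order.quantity
        accepted.append(order)
    return accepted, flagged
```

Because orders are checked in sequence against the running stock level, two orders in the same batch cannot both claim the last unit of a product.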

Scalability and Performance Considerations

Batch processing systems, while effective for handling large volumes of data, require careful consideration of scalability and performance to ensure they remain efficient as data volumes increase. Anticipating potential bottlenecks and implementing strategies to mitigate them is crucial for long-term success. This section will detail the factors impacting scalability, methods for maintaining performance, and how to address potential issues.

Factors Affecting Batch Process Scalability

Several factors influence the scalability of batch processes. Data volume, processing complexity, system architecture, and the chosen tools and technologies all play critical roles. The complexity of the data transformation logic and the number of steps involved in the batch process directly correlate to the computational resources needed. An increase in the volume of data being processed will invariably strain system resources, and a poorly designed system architecture will severely limit scalability.

Finally, the specific tools and technologies employed can significantly impact the processing speed and the system’s ability to handle increasing data loads.

Ensuring Batch Process Performance

Maintaining performance as data volumes increase requires a multi-faceted approach. Firstly, efficient algorithms and optimized code are paramount. Secondly, the underlying infrastructure, including hardware and software, needs to be capable of handling the anticipated workload. Thirdly, strategies for parallel processing and distributed computing can significantly enhance performance. Finally, a robust monitoring system is essential to identify and resolve performance bottlenecks proactively.

Implementing these strategies is crucial for maintaining a smooth and efficient batch processing system.
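As one possible illustration of the parallel processing point above, the standard library’s `concurrent.futures` module can fan batches out to a pool of workers. The helper name `process_in_parallel` and the default worker count are assumptions.

```python
from concurrent.futures import ThreadPoolExecutor


def process_in_parallel(batches, worker, max_workers=4):
    """Apply worker to each batch concurrently; results keep the input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(worker, batches))


# Hypothetical workload: sum each batch of numbers.
print(process_in_parallel([[1, 2], [3, 4], [5]], sum))
```

Threads suit I/O-bound work such as network or database calls. For CPU-bound work in Python, `ProcessPoolExecutor` from the same module is usually the better fit.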

Anticipating and Addressing Performance Bottlenecks

Predicting and mitigating performance bottlenecks is essential for a successful batch processing system. Careful analysis of processing steps, identification of potential resource constraints (CPU, memory, network bandwidth), and implementation of load balancing techniques are vital. Using profiling tools to identify performance bottlenecks in the code, coupled with strategic optimization techniques, can greatly improve efficiency. For instance, utilizing caching mechanisms to store frequently accessed data can reduce the load on the database and improve processing speed.
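The caching idea can be sketched with `functools.lru_cache`, which remembers the results of repeated lookups within a run. The tax-rate lookup here is a hypothetical stand-in for a slow database query, and the rates are made-up sample values.

```python
from functools import lru_cache


@lru_cache(maxsize=1024)
def get_tax_rate(region):
    """Stand-in for an expensive lookup; computed once per distinct region."""
    sample_rates = {"EU": 0.20, "US": 0.07}  # illustrative values only
    return sample_rates.get(region, 0.0)


def price_with_tax(orders):
    """Return the taxed price for each (region, amount) pair."""
    return [round(amount * (1 + get_tax_rate(region)), 2) for region, amount in orders]
```

A cache like this is only safe when the underlying data does not change during the batch; otherwise entries need an expiry or an explicit `get_tax_rate.cache_clear()` between runs.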

Impact of Data Volume on Batch Processing Speed

The volume of data directly affects the processing time of a batch job. Higher volumes necessitate more processing power, storage capacity, and potentially more sophisticated algorithms. The table below illustrates a hypothetical scenario showcasing the impact of data volume on batch processing speed. It’s important to note that these times are highly dependent on the specific processing tasks, hardware, and software used.

| Data Volume (GB) | Estimated Batch Processing Time (hours) |
|---|---|
| 10 | 1 |
| 100 | 10 |
| 1,000 | 100 |
| 10,000 | 1,000 |

Security Considerations in Batch Processing

Batch processing, while efficient for large-scale data manipulation, presents unique security challenges. Ensuring the integrity and confidentiality of sensitive data throughout the entire batch operation is paramount. Compromises in this area can lead to significant financial losses, reputational damage, and regulatory penalties. Robust security measures are essential to mitigate these risks.

Security Implications of Batch Processing

Batch processing often involves handling large volumes of sensitive data, including financial transactions, personal information, and intellectual property. This concentrated processing increases the potential attack surface, making it a prime target for malicious actors seeking to compromise data integrity or exfiltrate sensitive information. Unauthorized access, data breaches, and modification of critical data during batch operations can have serious consequences.

Protecting Sensitive Data During Batch Operations

Implementing stringent security measures throughout the entire batch processing lifecycle is critical. This includes safeguarding data at rest, in transit, and during processing. Employing robust access controls, encryption techniques, and intrusion detection systems are key components of a comprehensive security strategy. Regular security audits and vulnerability assessments are vital to identify and address potential weaknesses.

Data Encryption and Access Control in Batch Processes

Data encryption is a fundamental security measure for protecting sensitive data during batch processing. Encrypting data at rest, in transit, and during processing minimizes the risk of unauthorized access or disclosure. Strong encryption algorithms and key management procedures are essential to maintain data confidentiality. Access control mechanisms, including user authentication and authorization, restrict access to sensitive data and batch processes based on the principle of least privilege.

This prevents unauthorized users from accessing or manipulating critical data.

Security Best Practices for Batch Processing

Implementing a structured set of security best practices is crucial to mitigating risks and maintaining data integrity. These practices encompass various stages of the batch process, from data input to output.

| Security Best Practice | Description |
|---|---|
| Data Encryption | Encrypt sensitive data both at rest and in transit using strong encryption algorithms. Employ secure key management practices to safeguard encryption keys. |
| Access Control | Implement strict access controls, limiting access to batch processes and sensitive data to authorized personnel only. Utilize the principle of least privilege to grant minimal necessary access. |
| Regular Security Audits | Conduct regular security audits and vulnerability assessments to identify and address potential security vulnerabilities in the batch processing system. |
| Secure Logging and Monitoring | Implement robust logging and monitoring mechanisms to track and analyze batch processing activities, including user actions, data modifications, and system events. |
| Input Validation and Sanitization | Validate and sanitize all input data to prevent injection attacks and ensure data integrity. Prevent malicious code from entering the batch process. |
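The input validation practice can be illustrated with Python’s built-in `sqlite3` module. The sketch below rejects malformed rows and inserts the rest through a parameterized query, so input text is never interpreted as SQL. The `customers` table, its columns, and the very simple email check are assumptions for illustration.

```python
import sqlite3


def load_customers(connection, rows):
    """Validate rows, then insert the valid ones in one parameterized batch."""
    valid, rejected = [], []
    for row in rows:
        name, email = row.get("name"), row.get("email")
        name_ok = isinstance(name, str) and name.strip() != ""
        email_ok = isinstance(email, str) and email.count("@") == 1
        if name_ok and email_ok:
            valid.append((name.strip(), email.strip().lower()))
        else:
            rejected.append(row)
    with connection:  # commits on success, rolls back if the insert fails
        connection.executemany(
            "INSERT INTO customers (name, email) VALUES (?, ?)", valid
        )
    return len(valid), rejected
```

Using the connection as a context manager also means the whole batch is inserted in a single transaction, so a failure partway through leaves the table unchanged.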

Final Review

In conclusion, mastering batch processing empowers individuals and organizations to optimize their operations, enhance productivity, and achieve significant cost savings. By carefully considering task selection, implementing appropriate strategies, and employing the right tools, you can significantly improve the efficiency and effectiveness of your workflows. This guide has provided a comprehensive overview of batching tasks, equipping you with the knowledge to implement this powerful technique.